Bioimpacts. 2025;15:30419.

doi: 10.34172/bi.30419

Original Article

A new method for early diagnosis and treatment of meniscus injury of knee joint in student physical fitness tests based on deep learning method

Yan Fang Conceptualization, Investigation, Methodology, Resources, Validation, Visualization, Writing – original draft, 1

Lu Liu Conceptualization, Project administration, Resources, Supervision, Writing – review & editing, 1, *

Qingyu Yang Conceptualization, Investigation, Methodology, Validation, Visualization, Writing – original draft, 2

Shuang Hao Investigation, Writing – original draft, 2

Zhihai Luo Resources, Writing – review & editing, 3

Author information:

1Chengdu University of Information Technology, Chengdu City 610225, China

2School of Physical Education and Health, Chengdu University of Traditional Chinese Medicine, Chengdu 611137, China

3Chengdu Jinchen Technology Co., Ltd., Chengdu 611137, China

Abstract

Introduction:

Meniscus injuries in athletes' knee joints not only hinder performance but also pose substantial challenges in timely diagnosis and effective treatment. Delayed or inaccurate diagnosis often leads to prolonged recovery periods, exacerbating athletes' discomfort and compromising their ability to return to peak performance levels. Therefore, the accurate and timely diagnosis of meniscus injuries is crucial for athletes to receive appropriate treatment promptly and resume their training regimen effectively.

Methods:

This paper presents a multi-step approach for diagnosing meniscus injuries: images are first segmented into lesion regions, and the injury is then identified with a combined (ensemble) classification method. Image noise is reduced first, after which an enhanced version of the U-Net algorithm performs image segmentation and identifies regions of interest for potential injury detection.

Results:

To diagnose injury images, features were extracted with the contour method, and injury types were identified with an ensemble method based on majority (category-based) voting. The method was evaluated on a well-recognized dataset of MRI images of knee joint injuries.

Conclusion:

The findings show that the proposed approach performs significantly better than recently developed techniques.

Keywords: Meniscus injury, Athletes’ knee joint, Improved U-Net, Multistage classification, Ensemble classification

Copyright and License Information

© 2025 The Author(s).

This work is published by BioImpacts as an open access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/4.0/). Non-commercial uses of the work are permitted, provided the original work is properly cited.

Funding Statement

Not applicable.

Introduction

The knee is an important part of the human body, and detecting its injuries is vital because they can strongly affect athletes' quality of life. The knee is a complex joint that supports the body and enables a range of movements essential for everyday activities. Unfortunately, knee injuries are common and may substantially affect athletes' quality of life.1,2 To achieve fast reaction and movement speeds, athletes need explosive lower-limb strength, which places high local loads on the knee joint and frequently leads to knee injuries.1 Timely diagnosis of meniscus injury is important for preventing knee joint dysfunction and improving patient outcomes, since it reduces morbidity and improves the course of recovery.3

From a clinical viewpoint, magnetic resonance imaging (MRI) of the knee provides high diagnostic accuracy for many osseous and soft-tissue injuries, while avoiding the need for diagnostic arthroscopy in many cases.4 A large body of work addresses knee MRI findings, and many researchers are applying machine learning and deep learning methods to detect disease from MRI in new ways.5 MRI can assess all joint structures, including the meniscus, that can be sources of knee pain in athletes undergoing physical fitness testing.6

To this end, machine learning algorithms have recently emerged as a promising direction for building automated tools that assist radiologists. Machine learning is a multifaceted field applied in many areas, such as computer vision, natural language processing, and medicine, where it transforms images into structured and semi-structured data using algorithms that improve automatically with experience. It is usually considered a subfield of artificial intelligence (AI) that builds mathematical models and learns generalizable patterns from input data, which are later used to make predictions on unseen cases. Owing to these properties, a large body of research has applied such algorithms to medical imaging tasks such as skin cancer classification, diabetic retinopathy detection, and lung nodule diagnosis. Beyond these uses, machine learning models have also made their way into knee MRI, and AI has contributed to new advanced treatments of meniscus injuries.7 Reference 1 proposed several deep-learning-based knee joint injury monitoring models and trained and evaluated their effectiveness.

Bien et al8 proposed a deep learning approach to detect different knee injuries from MRIs. Specifically, they relied on convolutional neural networks (CNNs),9 machine learning models used to analyze and extract information from images, to learn significant patterns from knee MRIs that can be associated with the type of injury. Similarly, Astuto et al10 developed 3D CNNs to detect regions of interest within MRI studies and to grade abnormalities in the cartilage, bone marrow, and other structures.1

Researchers have carried out many investigations using MRI to find the most suitable and fastest methods for measuring knee structures, including segmentation of 2D MRI slices and restricting the evaluation to limited regions of those structures. However, these methods were not robust enough to be applied in clinical trials, particularly for detecting small structural changes. Direct segmentation of cartilage without first recognizing bone is harder because of the complexity of the cartilage structure. To simplify the task, bone can be detected first and used as the initial step for segmenting other structures such as bone marrow and cartilage.11

Deep learning methods have addressed analytical problems in the vision and audio domains well because of their ability to extract high-level features. They can produce strong segmentation and classification results because they learn directly from raw, high-dimensional input and extract its features layer by layer. Interest in deep learning applications for medical images has grown in recent years. CNNs have shown high performance on a variety of medical image segmentation problems and achieved acceptable results across segmentation tasks. Among the various deep learning models, U-Net was developed for segmenting neuronal structures in microscopy images. Its network has a distinctive U-shaped architecture. U-Net won the IEEE International Symposium on Biomedical Imaging (ISBI) challenge because it produced fast and accurate segmentation results. Many medical image analysis studies have adopted U-Net because it tolerates small datasets and produces robust, accurate segmentations.11

In the literature, most studies address the following topics: detection, segmentation, evaluation, prediction, and reviews of deep learning for MRI. In the area of detection, deep learning on MRI for automated knee injury diagnosis was analyzed in 2023, and another study proposed a machine learning approach for detecting knee injuries from MRI.1,3,5,6,11-16 Other studies addressed deep learning segmentation of knee anatomy.7,11,17-21 Rajamohan et al22 built a risk assessment model to predict total knee replacement (TKR) from baseline clinical risk factors, and deep learning models were built to predict TKR from baseline knee radiographs and MRI. In 2022, the generalizability of deep learning-based models was evaluated by applying pre-trained models to independent datasets.23 In another work,24 automated bone age assessment from the knee joint was proposed by combining deep learning and MRI-based radiomics. Paul et al25 studied knee injuries with the goal of developing and testing the performance of a deep learning system. Astuto et al26 tested the hypothesis that artificial intelligence methods can help detect and grade lesion severity in the cartilage, bone marrow, meniscus, and other knee structures, improving overall MRI inter-reader agreement. Another study27 presented a fully automatic method for segmentation and subregional assessment of articular cartilage and evaluated its predictive power for the development of radiographic osteoarthritis. Other works4,28,29 reviewed AI for MRI diagnosis of joints. Alexopoulos et al30 predicted MRI-based knee. Mauer et al31 aimed to develop a machine learning method for three-dimensional magnetic resonance images of the knee. Tolpadi et al32 proposed a deep learning model that leverages MRI and clinical information to predict TKR.

The proposed method has several distinctive features:

- Using a deep architecture based on U-Net removes the need for a dedicated feature extraction stage when obtaining segmentation features.

- The enhanced U-Net framework is applicable to medical imaging, where low-quality images remain a persistent challenge; its promising performance is attributed to its effectiveness with limited datasets.

- A two-stage approach to image analysis is proposed, in which healthy and unhealthy images are first separated and only then labeled by injury type. Because the proposed method performs strongly in the segmentation stage, overall performance improves noticeably.

The rest of the paper is organized as follows. First, we describe the proposed method, including the modified U-Net structure. We then present the results of the method on the dataset. Finally, a conclusion is provided.

Methods

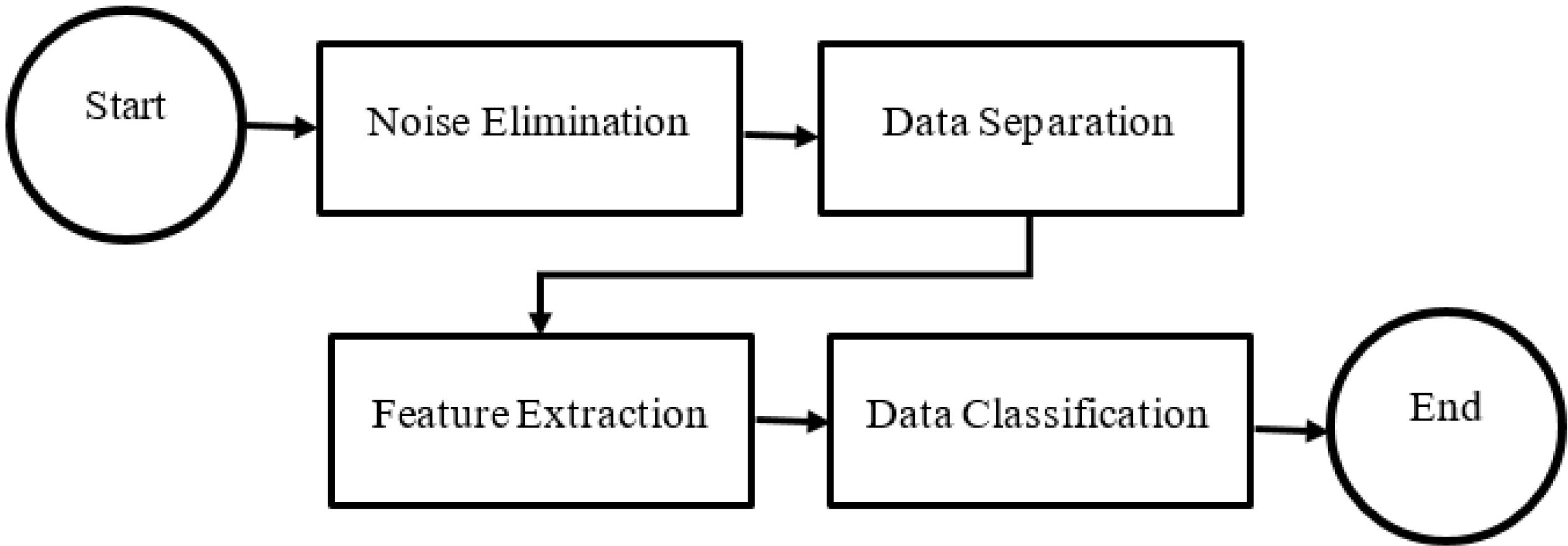

This section presents the proposed methodology for identifying and classifying meniscus injuries of athletes' knee joints, as depicted in Fig. 1. The proposed algorithm consists of four stages:

- The first stage addresses potential inaccuracies arising from noise in, and mixing of, the MRI data obtained from athletes. Effective noise removal is therefore essential to mitigate these issues and improve the accuracy of the system; applying noise removal methods to the athletes' images can effectively reduce the prevailing noise levels.

- The second stage separates the data into categories using a mask produced by the refined U-Net.

- The third stage extracts features from the data masks through contour analysis.

- The fourth stage classifies the data with machine learning using a voting method, commonly referred to as the Voting Classifier.

Fig. 1.

An overview of the steps of the proposed method


First stage, noise removal

A common issue in medical engineering is that images obtained from imaging devices, like other signals, intermittently contain extraneous distortions known as noise, which pose notable challenges for image processing algorithms. Such disturbances are generally attributable to technical issues during imaging, environmental factors, and motion or vibration of the athlete during scanning. In this study, a Gaussian blur filter is applied for noise reduction: the image is convolved with a Gaussian kernel, so that the output value at each central pixel is the weighted sum of the pixel values within the kernel window. The central pixel has the largest weight, and the weight of each kernel pixel decreases gradually with its distance from the center.
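The Gaussian denoising step can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' code: the kernel size (5) and standard deviation (sigma = 1.0) are assumptions, since the paper does not report them, and reflect-padding at the image border is likewise an assumed choice.

```python
import numpy as np


def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Build a normalized 2D Gaussian kernel (size and sigma are assumed values)."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd so that it has a central pixel")
    half = size // 2
    ax = np.arange(-half, half + 1, dtype=float)
    xx, yy = np.meshgrid(ax, ax)
    # Weight is largest at the centre and decays with distance from it.
    kernel = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return kernel / kernel.sum()  # weights sum to 1, so mean brightness is preserved


def gaussian_blur(image: np.ndarray, size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Denoise a 2D grayscale slice by convolving it with a Gaussian kernel."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    padded = np.pad(image.astype(float), half, mode="reflect")
    out = np.zeros(image.shape, dtype=float)
    h, w = image.shape
    # Each output pixel is the weighted sum of its size x size neighbourhood.
    # The kernel is symmetric, so this correlation equals a convolution.
    for dy in range(size):
        for dx in range(size):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


rng = np.random.default_rng(0)
noisy = 0.5 + rng.normal(0.0, 0.1, size=(128, 128))  # synthetic noisy 128x128 slice
smoothed = gaussian_blur(noisy)
print(noisy.std(), smoothed.std())  # the blurred slice has a smaller spread
```

In practice a library routine (for example an OpenCV or SciPy Gaussian filter) would replace the explicit loop; the loop is kept here to make the weighted-sum rule visible.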

Second stage, separation of different images

In this stage, the data are partitioned. As stated previously, the dataset of athletes' knee joint images used to analyze meniscus injury comprises three categories: Meniscus, normal, and Synovial capsule. The proposed method first divides the data into two categories: normal data, which contain no Meniscus features, and anomalous data, comprising the Meniscus and Synovial capsule instances. The main objective of this stage is therefore to identify the normal data. An injury detection approach that uses an enhanced U-Net network for segmentation was used to recognize injury-related regions, and each image is then classified according to the presence or absence of an injury: an image with no injury region is labeled healthy, and an image showing an injury is labeled unhealthy. The enhanced U-Net used in this stage is described below.
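The healthy/unhealthy decision described above can be expressed as a simple rule on the U-Net output mask. The sketch below assumes the network outputs a per-pixel injury probability map; the function name `classify_from_mask` and its `threshold` and `min_pixels` parameters are hypothetical and not taken from the study.

```python
import numpy as np


def classify_from_mask(prob_mask: np.ndarray, threshold: float = 0.5, min_pixels: int = 1) -> str:
    """Label a slice from its predicted injury mask (hypothetical helper).

    threshold and min_pixels are illustrative assumptions, not values from the study.
    """
    # Binarize the U-Net output: pixels at or above the threshold count as injury.
    injury = prob_mask >= threshold
    # Any sufficiently large injury area makes the whole image "unhealthy".
    return "unhealthy" if int(injury.sum()) >= min_pixels else "healthy"


mask = np.zeros((128, 128))
print(classify_from_mask(mask))                  # healthy
mask[60:64, 60:64] = 0.9                         # a small 4x4 candidate lesion
print(classify_from_mask(mask))                  # unhealthy
print(classify_from_mask(mask, min_pixels=20))   # healthy: 16 pixels < 20
```

A `min_pixels` value above 1 is one way to ignore isolated false-positive pixels; whether the study used such a filter is not stated.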

U-Net model

Deep neural networks have attracted sustained interest from researchers in recent years. Training such networks effectively requires a large amount of labeled data, often thousands of samples, and the scarcity of such datasets has long been a significant obstacle in medical engineering, leaving these networks inadequately trained. U-Net addresses this issue by making strong use of data augmentation, generating modified copies of the available images so that the deep network can be trained effectively. U-Net has gained recognition for identifying and segmenting image components, particularly in medical image processing, because of its high precision, fast training, and lack of need for extensive datasets or costly, complex hardware.

U-Net was developed in 2015 at the University of Freiburg, Germany, by Olaf Ronneberger and co-authors, with the aim of improving the precision and efficiency of processing and learning with convolutional networks.33 A notable feature of its architecture is the absence of fully connected layers, which reduces network complexity. The network first builds a contracting path using pooling layers; once the required features have been extracted, a successive expanding path, in which upsampling layers take the place of pooling layers, reconstructs the image while propagating the contextual information within it. In summary, U-Net has a symmetric contracting and expanding structure, and information lost along the contracting path is restored along the expanding path.

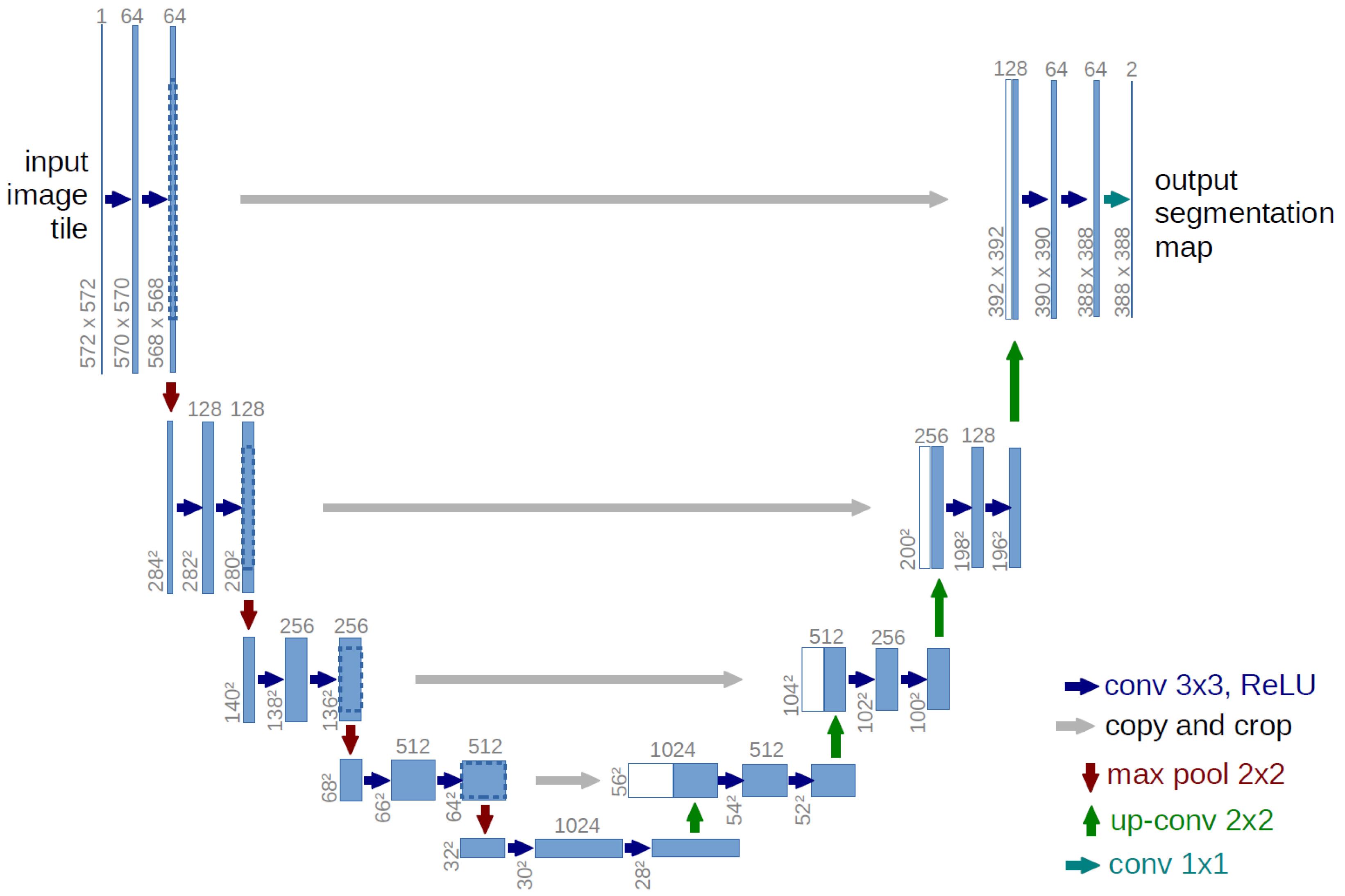

Fig. 2 illustrates the network. The contracting path resembles a conventional convolutional neural network: convolutional layers with kernels of varying dimensions are applied to the input image, each followed by a rectified linear unit (ReLU) activation, and a max-pooling layer with a stride of 2 then downsamples the feature map. Applying these three layers in sequence produces one downsampling step, and each downsampling step doubles the number of feature channels. Each step of the expanding path consists of an upsampling layer followed by a convolution that halves the number of feature channels, and a concatenation with the corresponding feature map from the contracting path; the result is then processed by convolution layers with ReLU activation. In the final layer, a convolution maps each 64-component feature vector to the target classes. In total, the U-Net network comprises 23 convolutional layers.

Fig. 2.

Typical U-Net neural network architecture.

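The layer arithmetic of the typical U-Net can be checked with a small shape tracer. This sketch assumes the configuration of the original U-Net paper (unpadded 3x3 convolutions that each trim 2 pixels, 2x2 max pooling with stride 2, 2x2 up-convolutions, a final 1x1 convolution, and a 572x572 input); these settings are assumptions about Fig. 2, and `unet_shapes` is a hypothetical helper, not part of any library.

```python
def unet_shapes(input_size: int = 572, depth: int = 4, base_channels: int = 64):
    """Trace spatial size, channel count and conv-layer count through a U-Net.

    Assumes unpadded 3x3 convs, 2x2 max pooling (stride 2), 2x2 up-convs
    and a final 1x1 conv, as in the original U-Net configuration.
    """
    size, channels, n_conv = input_size, base_channels, 0
    trace = []
    # Contracting path: two 3x3 convs per level, then pooling halves the size
    # and the next level doubles the number of feature channels.
    for level in range(depth):
        size -= 4
        n_conv += 2
        trace.append(("down", level, size, channels))
        if size % 2:
            raise ValueError("feature map size must be even before pooling")
        size //= 2
        channels *= 2
    # Bottleneck: two more 3x3 convs.
    size -= 4
    n_conv += 2
    trace.append(("bottom", depth, size, channels))
    # Expanding path: 2x2 up-conv (doubles size, halves channels) + two 3x3 convs.
    for level in reversed(range(depth)):
        size = size * 2 - 4
        channels //= 2
        n_conv += 3
        trace.append(("up", level, size, channels))
    n_conv += 1  # final 1x1 conv mapping the 64 channels to class scores
    return size, channels, n_conv, trace


out_size, out_channels, n_conv, trace = unet_shapes()
for stage, level, size, channels in trace:
    print(f"{stage:>6} level {level}: {size}x{size}x{channels}")
print(out_size, out_channels, n_conv)  # 388 64 23
```

Under these assumptions the tracer reproduces the 23 convolutional layers stated above and a 388x388x64 final feature map.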

The energy function of the network is computed by applying a pixel-wise softmax to the final output map, combined with the cross-entropy loss function. The softmax is defined as

$$p_k(x) = \frac{\exp\big(a_k(x)\big)}{\sum_{k'=1}^{K} \exp\big(a_{k'}(x)\big)} \tag{1}$$

Here, $a_k(x)$ denotes the activation in feature channel $k$ at pixel position $x \in \Omega$, where $\Omega \subset \mathbb{Z}^2$ is the set of pixel positions, $K$ is the number of classes, and $p_k(x)$ is the approximated maximum function. The cross-entropy then penalizes, at each position, the deviation of $p_{l(x)}(x)$ from 1:

$$E = -\sum_{x \in \Omega} w(x)\,\log\big(p_{l(x)}(x)\big) \tag{2}$$

Here, $l: \Omega \to \{1, \dots, K\}$ is the true label of each pixel, and $w: \Omega \to \mathbb{R}$ is a weight map that gives certain pixels more importance during training.
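The pixel-wise softmax and weighted cross-entropy described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' TensorFlow code; labels are 0-indexed here (0 to K-1) rather than 1 to K, and an all-ones weight map is assumed when none is given.

```python
import numpy as np


def pixel_softmax(logits: np.ndarray) -> np.ndarray:
    """Softmax over the channel axis of an (H, W, K) activation map a_k(x)."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def weighted_cross_entropy(logits, labels, weights=None) -> float:
    """Weighted cross-entropy summed over all pixels.

    logits: (H, W, K) activations; labels: (H, W) integer labels in 0..K-1;
    weights: (H, W) weight map w(x), all ones if omitted (an assumption).
    """
    probs = pixel_softmax(logits)
    h, w = labels.shape
    rows, cols = np.indices((h, w))
    p_true = probs[rows, cols, labels]  # probability of the true class at each pixel
    if weights is None:
        weights = np.ones((h, w))
    return float(-(weights * np.log(p_true)).sum())


logits = np.zeros((4, 4, 3))          # identical activations for K = 3 classes
labels = np.zeros((4, 4), dtype=int)
print(weighted_cross_entropy(logits, labels))  # 16 * log(3), about 17.58
```

With identical activations every class gets probability 1/3, so each of the 16 pixels contributes log 3 to the loss.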

Optimization uses stochastic gradient descent with a decreasing learning rate. A high momentum of 0.99 is recommended, so that the network takes its previous updates into account when computing the current one. The initialization of the network and its layers also requires careful attention: poor initialization can unbalance network activations, so that some parts of the network are excessively activated while others are never activated, ultimately degrading performance.33
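The optimizer settings described above can be illustrated with a framework-free sketch: SGD with momentum 0.99, an exponentially decaying learning rate, and Gaussian weight initialization with standard deviation sqrt(2/N), N being the number of incoming connections, as recommended in the original U-Net paper. The initial learning rate, decay rate and decay interval are assumptions, since the paper does not report them; in TensorFlow the same ideas map to an SGD optimizer with a learning-rate schedule and a He-normal initializer.

```python
import numpy as np


def he_normal(fan_in: int, shape, rng) -> np.ndarray:
    """Gaussian init with std sqrt(2 / N), N = number of incoming connections."""
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=shape)


def decayed_lr(step: int, lr0: float = 0.01, decay_rate: float = 0.96, decay_steps: int = 100) -> float:
    """Exponentially decaying learning rate (lr0, decay_rate, decay_steps are assumed)."""
    return lr0 * decay_rate ** (step / decay_steps)


def sgd_momentum_step(w, grad, velocity, lr, momentum=0.99):
    """One SGD update with momentum: earlier updates keep contributing."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


# Toy run on the quadratic loss 0.5 * ||w||^2, whose gradient is w itself.
rng = np.random.default_rng(0)
w0 = he_normal(fan_in=9 * 64, shape=(3, 3, 64), rng=rng)  # e.g. a 3x3 kernel over 64 channels
w, v = w0.copy(), np.zeros_like(w0)
for step in range(3000):
    w, v = sgd_momentum_step(w, w, v, decayed_lr(step))
print(np.linalg.norm(w0), np.linalg.norm(w))  # the weights shrink towards the minimum at 0
```

The high momentum makes the trajectory oscillate before settling, which is why a decaying learning rate is commonly paired with it.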

When training data are limited, data augmentation is a highly effective strategy for training U-Net. Augmentation applies a series of modifications to the original image, including rotation by various angles, changes in brightness and darkness, resizing, adding noise, and blurring, so that many new images can be generated for training. Dropout layers at the end of the contracting path also contribute, by perturbing the features during training. U-Net applies diverse forms of augmentation, which not only provide a broad corpus of training data that enhances learning but also offer a notable advantage: they modify the texture of medical images, which is the predominant variation encountered in clinical practice. Incorporating augmentation thus improves the network's ability to recognize various texture modifications.
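A minimal augmentation routine in the spirit of the paragraph above is sketched below. It is an assumption-laden illustration, not the study's pipeline: rotation is restricted to multiples of 90 degrees, the brightness and noise ranges are made up, and resizing and blurring are omitted for brevity. The key point it shows is that geometric changes must be applied to the image and its segmentation mask together, while intensity changes touch only the image.

```python
import numpy as np


def augment(image: np.ndarray, mask: np.ndarray, rng):
    """Return a randomly augmented copy of an (H, W) slice and its mask.

    Rotation (multiples of 90 degrees) is applied to both image and mask;
    brightness scaling and Gaussian noise only to the image. All ranges
    are illustrative assumptions.
    """
    k = int(rng.integers(0, 4))
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    image = image * rng.uniform(0.8, 1.2)               # brighter or darker
    image = image + rng.normal(0.0, 0.02, image.shape)  # additive Gaussian noise
    return np.clip(image, 0.0, 1.0), mask.copy()


rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(128, 128))
mask = np.zeros((128, 128), dtype=np.uint8)
mask[40:60, 30:50] = 1                                  # a labeled lesion region
pairs = [augment(image, mask, rng) for _ in range(8)]   # eight new training pairs
print(len(pairs), pairs[0][0].shape)
```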

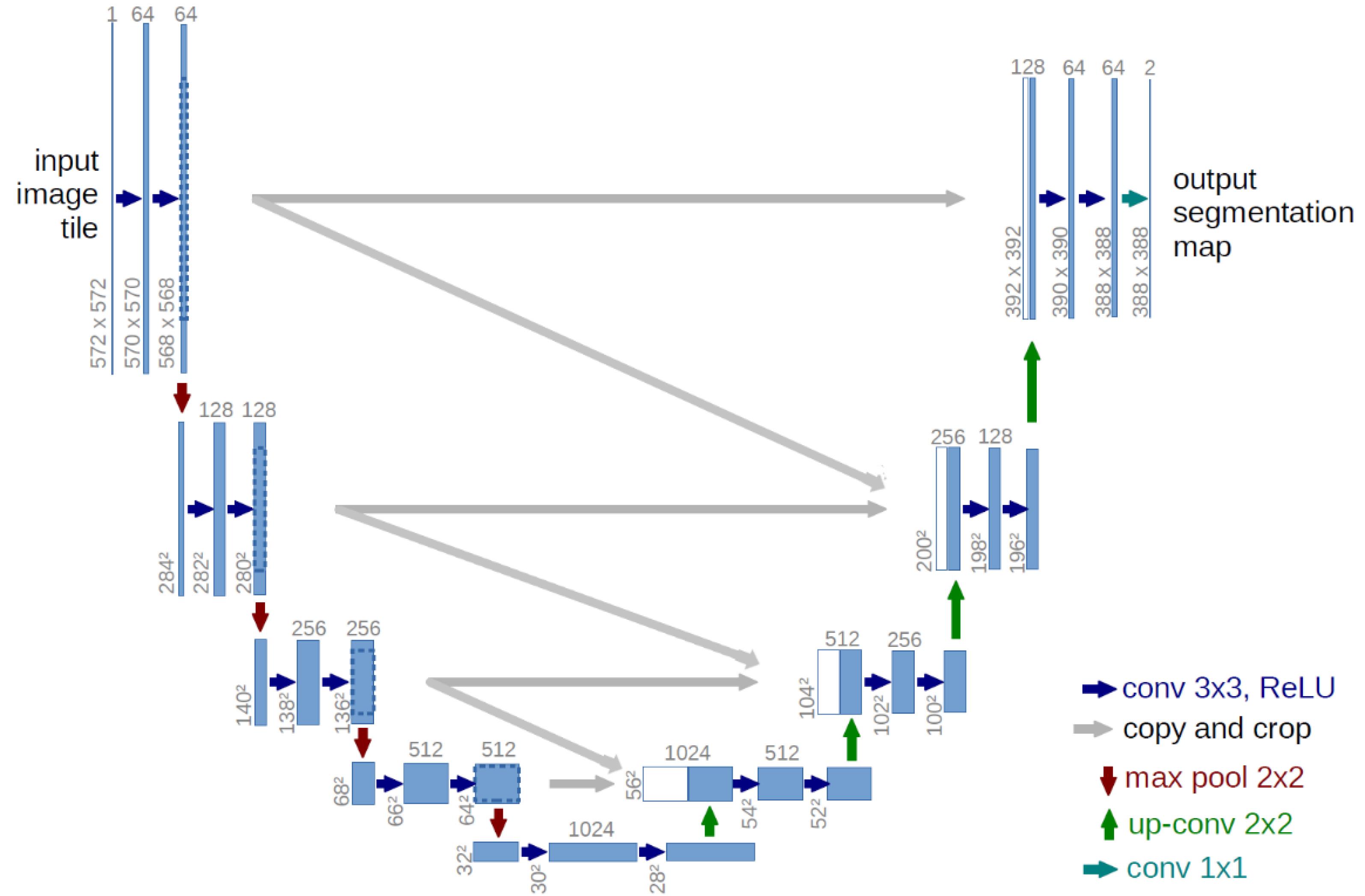

Developed U-Net model

In a typical U-Net, each step of the expanding path consists of an upsampling layer followed by a convolutional layer that reduces the number of feature channels, and the result is merged with the feature map of the corresponding layer in the contracting path. The notable difference in the proposed model is that, in addition to the corresponding layer, each expansion step also receives features from the preceding layer of the contracting path. The modified architecture is shown in Fig. 3. This modification is intended to exploit the capabilities of earlier layers by integrating their features into later layers; combining feature maps across different layers increases the precision of the segmentation. The number of features extracted in each layer of the proposed method is also shown in Fig. 3. The last layer has 64 channels, each of size 388 × 388, so it contains 388 × 388 × 64 = 9,634,816 features.

Fig. 3.

The proposed extended U-Net.

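One possible reading of the extended merge is sketched below with NumPy feature maps. The interpretation is an assumption: here the "preceding layer of the contracting path" is taken to be the next higher-resolution level, which is max-pooled to the target resolution before being concatenated with the upsampled decoder map and the usual same-level skip connection. The helper names are hypothetical, and nearest-neighbour upsampling stands in for the learned up-convolution.

```python
import numpy as np


def upsample2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an (H, W, C) feature map."""
    return x.repeat(2, axis=0).repeat(2, axis=1)


def maxpool2x(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 of an (H, W, C) feature map (H, W even)."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))


def extended_merge(decoder, skip_same, skip_prev):
    """Hypothetical merge for one expansion step.

    Concatenates (1) the upsampled expanding-path map, (2) the contracting-path
    map at the same resolution and (3) a pooled copy of the preceding,
    higher-resolution contracting-path map.
    """
    return np.concatenate([upsample2x(decoder), skip_same, maxpool2x(skip_prev)], axis=-1)


rng = np.random.default_rng(0)
decoder = rng.normal(size=(8, 8, 128))     # deeper expanding-path map
skip_same = rng.normal(size=(16, 16, 64))  # contracting-path map at the target resolution
skip_prev = rng.normal(size=(32, 32, 32))  # preceding, higher-resolution contracting map
merged = extended_merge(decoder, skip_same, skip_prev)
print(merged.shape)  # (16, 16, 224)
```

In a trained network, convolutions after the concatenation would learn how much to rely on each source.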

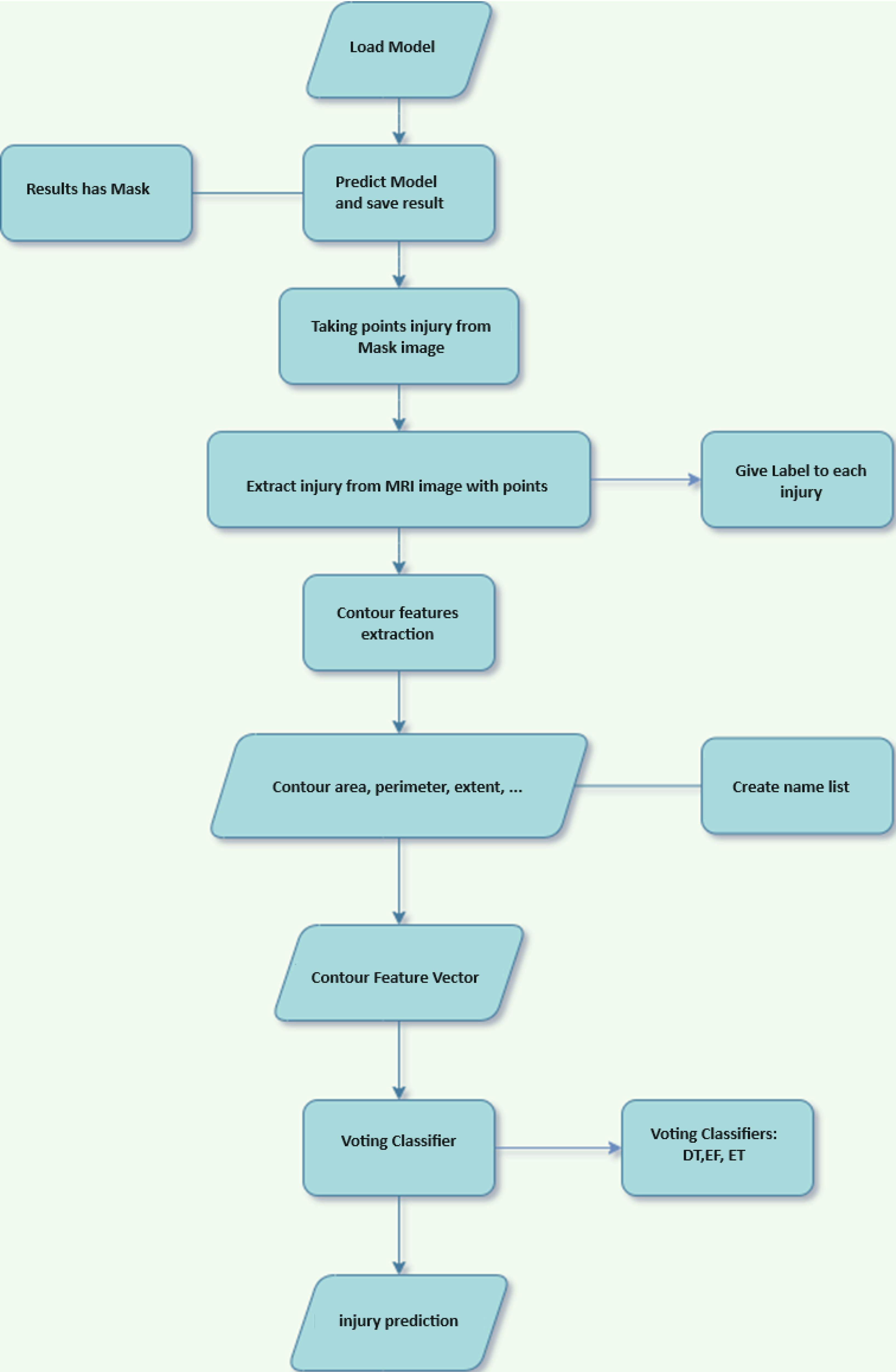

Feature extraction

In the preceding stage, healthy images were identified. In this stage, the unhealthy images are used to identify the specific type of injury, using the regions detected by the proposed U-Net. A single image may contain several regions, and if any one of them is identified as an injury, the entire image is labeled as an injury. To assess the regions produced by U-Net, a contour-based feature extraction technique is used; a schematic overview of the procedure is shown in Fig. 4. The choice of architecture, particularly the sequence of convolutional layers, is crucial in a deep learning model because it directly affects the model's ability to extract relevant features from the input data. Convolutional layers are well suited to image data because they extract hierarchical features effectively: stacking them lets the model capture both low-level features (such as edges and textures) and high-level features (such as shapes and objects). Each convolutional layer is typically followed by an activation function (e.g., ReLU) that introduces non-linearity, enabling the model to learn complex patterns and relationships in the data.

Fig. 4.

Flowchart of feature extraction and injury type classification


Contours

Identifying the objects depicted in images is a central problem in machine vision and a significant part of image understanding. Extracting boundary lines, or contours, from an image is a valuable step toward object recognition. Most contour detection techniques struggle with noise, shifts, scale variation, and rotation in the imagery, which leads to incomplete and suboptimal contour extraction. Contour extraction therefore calls for a method that is invariant to these factors. In this study, Zernike moments were used to extract contour features from the images,34,35 because they reduce the influence of external factors such as noise, shift, scale change, and rotation.

A contour can be described simply as a curve joining all continuous points along a boundary that have the same color or intensity. Contours are a useful tool for shape analysis and object recognition. Binary images give better accuracy, so thresholding or edge detection was applied before finding the contours. Several contour features were used to describe the injury images: area, perimeter, width, height, and the Extent, Solidity, and Equivalent Diameter features defined in Equations (3) to (5).

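$$\text{Extent} = \frac{\text{Contour area}}{\text{Bounding rectangle area}} \tag{3}$$

$$\text{Solidity} = \frac{\text{Contour area}}{\text{Convex hull area}} \tag{4}$$

$$\text{Equivalent Diameter} = \sqrt{\frac{4 \times \text{Contour area}}{\pi}} \tag{5}$$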
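The contour features listed above can be computed from a contour's vertex list with plain Python. This sketch assumes the contour is given as a closed polygon of (x, y) vertices and uses the standard definitions: extent is area over bounding-box area, solidity is area over convex-hull area, and equivalent diameter is sqrt(4 × area / π). In practice OpenCV functions such as `cv2.findContours`, `cv2.contourArea` and `cv2.convexHull` are commonly used; note that OpenCV's `cv2.boundingRect` counts pixels, so its width and height are one larger than the `max - min` span used here.

```python
import math


def polygon_area(pts):
    """Shoelace formula for the area enclosed by a closed contour."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def perimeter(pts):
    """Length of the closed contour."""
    return sum(math.dist(a, b) for a, b in zip(pts, pts[1:] + pts[:1]))


def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def contour_features(pts):
    """Shape features of one candidate injury region given as a list of (x, y) tuples."""
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    width, height = max(xs) - min(xs), max(ys) - min(ys)
    if width == 0 or height == 0:
        raise ValueError("degenerate contour")
    area = polygon_area(pts)
    return {
        "area": area,
        "perimeter": perimeter(pts),
        "width": width,
        "height": height,
        "extent": area / (width * height),
        "solidity": area / polygon_area(convex_hull(pts)),
        "equivalent_diameter": math.sqrt(4.0 * area / math.pi),
    }


square = [(0, 0), (10, 0), (10, 10), (0, 10)]
l_shape = [(0, 0), (10, 0), (10, 4), (4, 4), (4, 10), (0, 10)]
print(contour_features(square))
print(contour_features(l_shape))  # area 64, extent 0.64, solidity 64 / 82
```

The L-shaped example shows why solidity is informative: concave, irregular regions fall well below 1, while compact convex regions stay close to it.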

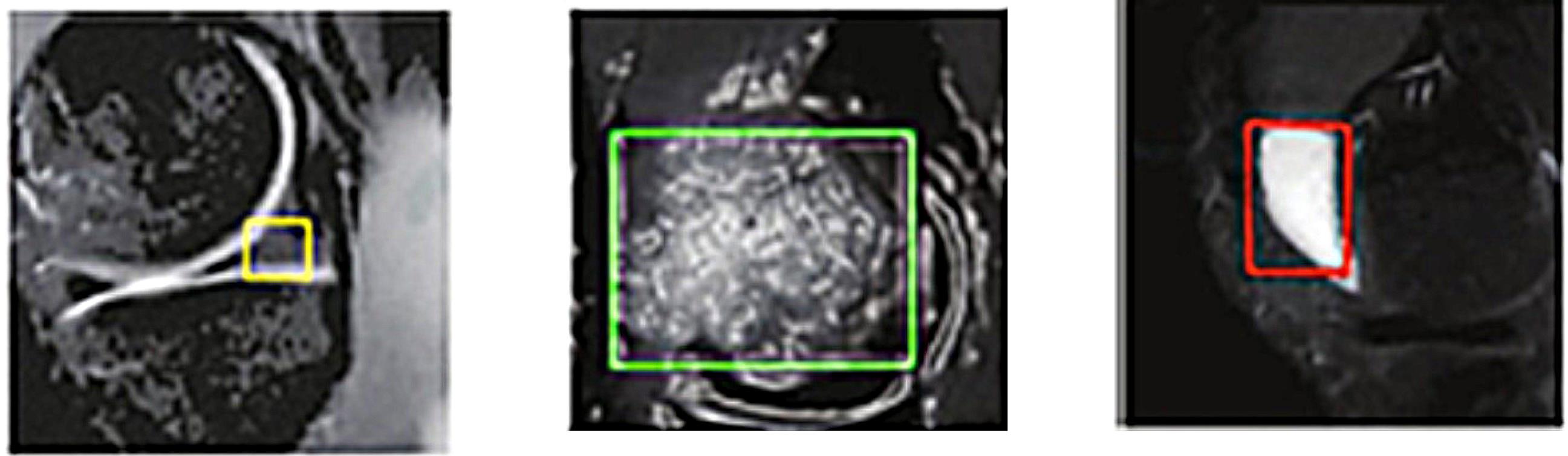

These features were chosen for their simplicity, while the size and shape of the extracted regions, as potential injury candidates, are critical to their diagnostic significance.36 Because the preceding stage extracts only the regions that show evidence of an injury, only the characteristics of these regions are used to determine the type of injury. Fig. 5 shows an example injury region that can be used for feature extraction to determine the injury classification.

Fig. 5.

An example of an injury identified for feature extraction.


Fourth step: voting-based injury type detection

In this stage, the abnormal images are categorized on the basis of the extracted features. A voting classifier determines the type of knee joint injury by applying decision tree, random forest, and extra trees classifiers to the feature vectors computed from the extracted contour features, and the final label of each image is the majority vote of the three classifiers. Ensemble classifiers combine multiple classifiers to improve classification accuracy: each base classifier builds its own model from the input data, and the final category is the one that receives the most votes.
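The hard-voting ensemble described above maps directly onto scikit-learn's `VotingClassifier`. The sketch below uses synthetic data from `make_classification` as a stand-in for the seven contour features (area, perimeter, width, height, extent, solidity, equivalent diameter); the data, split and hyperparameters are assumptions for illustration, not the study's.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier, VotingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for seven contour features per injury region;
# class 0 = Meniscus, class 1 = Synovial capsule. Not the study's data.
X, y = make_classification(n_samples=300, n_features=7, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

voter = VotingClassifier(
    estimators=[
        ("dt", DecisionTreeClassifier(random_state=0)),
        ("rf", RandomForestClassifier(n_estimators=100, random_state=0)),
        ("et", ExtraTreesClassifier(n_estimators=100, random_state=0)),
    ],
    voting="hard",  # each classifier casts one vote; the majority label wins
)
voter.fit(X_train, y_train)

# Compare each base classifier with the ensemble on the held-out split.
for name, est in voter.named_estimators_.items():
    print(name, round(est.score(X_test, y_test), 3))
print("vote", round(voter.score(X_test, y_test), 3))
```

With three voters and two classes a tie cannot occur; with three or more injury classes, hard voting can tie, and `voting="soft"` (averaging predicted probabilities) is a common alternative.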

Results

This section analyzes the results obtained with the proposed approach. Before presenting the dataset and evaluation procedures, note that the approach was implemented in the Python programming language with the TensorFlow framework. Experiments were run on an Intel Core i7 CPU M 620 @ 2.67 GHz processor with 8.00 GB of random access memory (RAM) and an NVIDIA GeForce GT 320M graphics processing unit. Hyperparameters were tuned by trial and error, and cross-validation was used to evaluate their values.

Data set and evaluation criteria

The dataset used in this paper contains three classes: normal, Meniscus, and Synovial capsule. MRI images of athletes with knee joint injuries were screened; after comparative analysis, repetitive and poor-quality data were removed, leaving 769 MRI images. In each image, the location and type of the lesions were marked by manual labeling. Details of the dataset are shown in Table 1. Compiled from various sources, the dataset covers different injuries in athletes across diverse classes and types, which enables the model to detect a variety of injuries. In Table 1, the "Classes" column gives the data class, the "Count" column the number of instances in each class, and the "Shape" column the array shape of each class's images (number of images, height, width, channels).

Table 1.

The details of the usage dataset

| Classes | Count | Shape |
|---|---|---|
| Meniscus | 441 | (441, 128, 128, 1) |
| Synovial capsule | 213 | (213, 128, 128, 1) |
| Normal | 115 | (115, 128, 128, 1) |

In this study, 20% of the available data were used as the test set and the remainder for training, with 10% of the data reserved for validation.
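The data split can be sketched with scikit-learn's `train_test_split` using the class counts from Table 1. Two choices here are assumptions, since the paper does not specify them: the splits are stratified by class, and the 10% validation set is carved out of the remaining training portion rather than out of the full dataset.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Labels reproducing the class counts of Table 1:
# 0 = Normal, 1 = Meniscus, 2 = Synovial capsule.
labels = np.array([0] * 115 + [1] * 441 + [2] * 213)
indices = np.arange(len(labels))

# 20% held out for testing, stratified so each class keeps its share (assumed).
train_idx, test_idx = train_test_split(
    indices, test_size=0.2, stratify=labels, random_state=42
)
# Assumption: the 10% validation split is taken from the remaining training data.
train_idx, val_idx = train_test_split(
    train_idx, test_size=0.1, stratify=labels[train_idx], random_state=42
)
print(len(train_idx), len(val_idx), len(test_idx))  # 553 62 154
```

Stratification matters here because the Normal class is much smaller than the Meniscus class; an unstratified split could leave very few normal images in the test set.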

The proposed method is evaluated with the accuracy and loss criteria, which are calculated as follows.

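$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{6}$$

$$\text{Loss} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} y_{i,k}\,\log\big(\hat{y}_{i,k}\big) \tag{7}$$

where $TP$, $TN$, $FP$, and $FN$ are the numbers of true positives, true negatives, false positives, and false negatives, $N$ is the number of samples, and $y_{i,k}$ and $\hat{y}_{i,k}$ are the true and predicted probabilities of class $k$ for sample $i$.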
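The accuracy criterion and a cross-entropy loss can be computed as in the NumPy sketch below. It assumes, as for the network's training objective, that the reported loss is the mean categorical cross-entropy; the small `eps` clipping constant is an assumption added to avoid taking the logarithm of zero.

```python
import numpy as np


def accuracy(y_true, y_pred) -> float:
    """Fraction of correctly classified samples, (TP + TN) / N for two classes."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float((y_true == y_pred).mean())


def cross_entropy_loss(y_true, probs, eps: float = 1e-12) -> float:
    """Mean categorical cross-entropy; probs has shape (N, K), y_true holds class indices."""
    y_true = np.asarray(y_true)
    probs = np.clip(np.asarray(probs, dtype=float), eps, 1.0)
    return float(-np.log(probs[np.arange(len(y_true)), y_true]).mean())


y_true = [1, 1, 0, 0]  # 1 = unhealthy, 0 = healthy
y_pred = [1, 1, 1, 0]
print(accuracy(y_true, y_pred))  # 0.75
probs = [[0.5, 0.5]] * 4
print(cross_entropy_loss(y_true, probs))  # log(2), about 0.693
```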

Because the data are imbalanced across the two classes, the proposed method was also evaluated with the area under the receiver operating characteristic curve (AUC-ROC). This metric is computed over all decision thresholds of the classifier: the receiver operating characteristic (ROC) curve plots the true positive rate against the false positive rate as the threshold varies, and the area under the curve (AUC) measures how well the model discriminates between classes. Models with higher AUC values perform better.
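The AUC can be computed without drawing the ROC curve, using its equivalent definition as the probability that a randomly chosen positive (unhealthy) image receives a higher score than a randomly chosen negative (healthy) one, with ties counted as one half. The sketch below is a minimal NumPy version; `sklearn.metrics.roc_auc_score` gives the same value for binary labels.

```python
import numpy as np


def roc_auc(labels, scores) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties = 1/2."""
    labels, scores = np.asarray(labels), np.asarray(scores, dtype=float)
    pos, neg = scores[labels == 1], scores[labels == 0]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("AUC needs at least one positive and one negative sample")
    greater = (pos[:, None] > neg[None, :]).sum()  # correctly ordered pairs
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


labels = [0, 0, 1, 1]            # 1 = unhealthy, 0 = healthy
scores = [0.1, 0.4, 0.35, 0.8]   # predicted probability of "unhealthy"
print(roc_auc(labels, scores))   # 0.75
```

Because it depends only on the ranking of scores, this metric is unaffected by the class imbalance in a way plain accuracy is not.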

Analysis of results

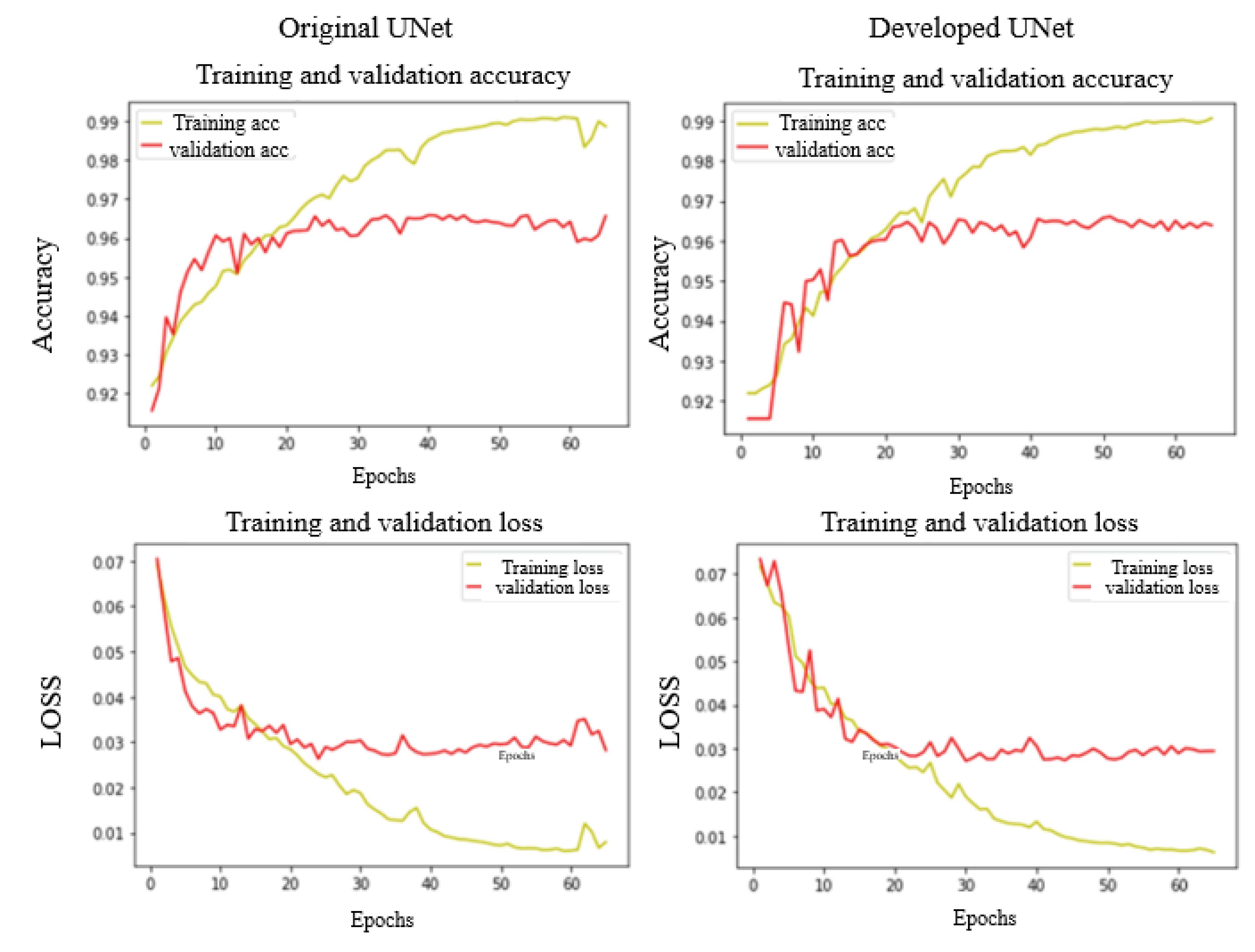

In the proposed method, the U-Net framework is first used as a discriminative tool to separate healthy from unhealthy images. Fig. 6 shows the accuracy and loss of the proposed approach using both the original and the improved U-Net models. Table 2 compares the developed and original U-Net on the test set: the developed U-Net reaches 97.43% accuracy, compared with 93.58% for the original U-Net, indicating that the modifications to the original U-Net improved the model's learning capability.

Fig. 6.

The results of training and validation based on the improved and normal U-Net. The first row shows the accuracy over training iterations, and the second row shows the loss. The left column shows the results of the normal U-Net and the right column those of the improved U-Net.


Table 2.

Performance comparison of developed and original U-Net in the test set

| Set | Model | Accuracy (%) |
|---|---|---|
| Test set | U-Net developed | 97.43 |
| Test set | U-Net original | 93.58 |

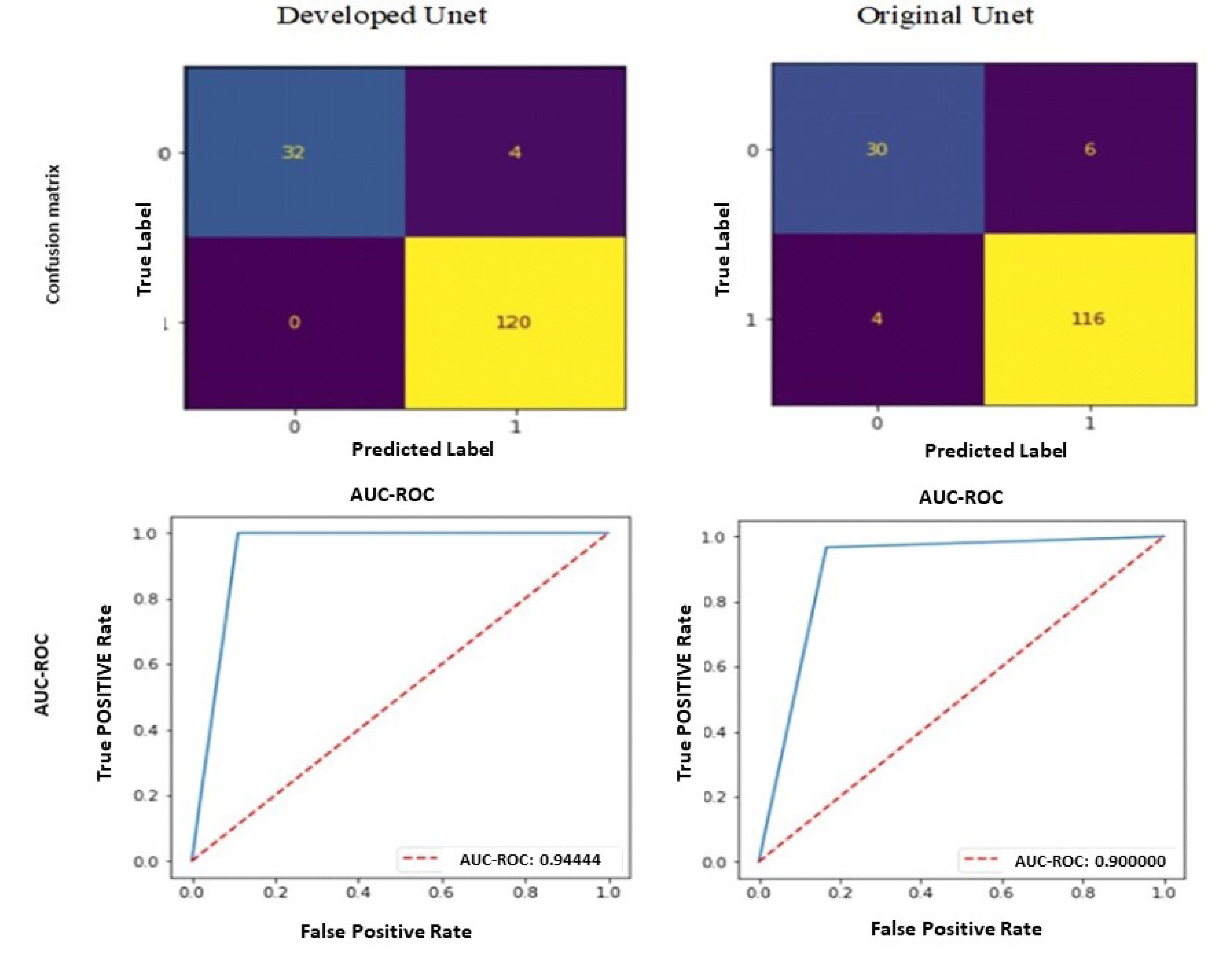

Fig. 7 compares the confusion matrices and AUC-ROC curves of the two methods on the test set. The developed U-Net misclassified only four healthy images as unhealthy; all remaining images were classified correctly. The most significant finding is that every injury image was correctly identified, so no Meniscus or Synovial capsule image was classified as normal. The original U-Net does not achieve this.
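In confusion-matrix terms, taking the unhealthy class (label 1) as positive, TP and TN denote the correctly classified unhealthy and healthy images (their individual counts are not stated in the text), FN = 0 because every injury image was detected, and FP = 4. The developed U-Net's sensitivity is therefore 100%, and the four errors reduce only its specificity:

$$
\mathrm{Sensitivity} = \frac{TP}{TP + FN} = \frac{TP}{TP + 0} = 1,
\qquad
\mathrm{Specificity} = \frac{TN}{TN + FP} = \frac{TN}{TN + 4} < 1
$$

For a screening stage this trade-off is the desirable one: a false positive is passed on to the second stage for further analysis, whereas a false negative would be lost.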

Fig. 7.

Comparison of the confusion matrices and AUC-ROC results on the test set. The first row shows the confusion matrices, in which 1 denotes the unhealthy class and 0 the healthy class. The second row shows the AUC-ROC curves.

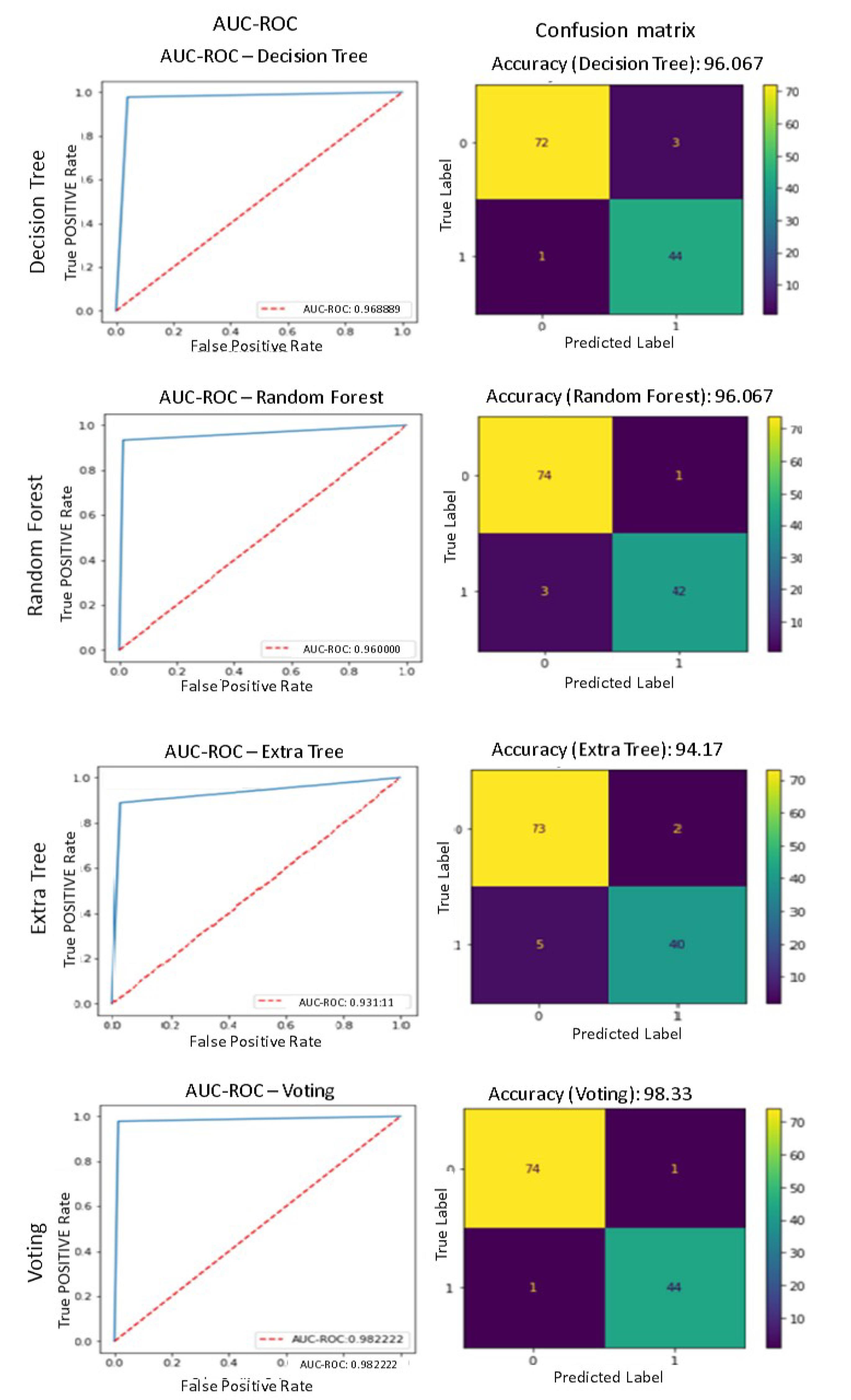

Next, we classify the injury type in the unhealthy images. Contour features are first extracted from every region that the improved U-Net marks as an injury candidate. These features are then used to train three classifiers: decision tree, random forest, and Extra Trees.
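The exact contour features used in this study are defined in the Methods and may differ from those shown here. As a hedged illustration only, the sketch below takes a binary mask of one candidate region (such as a U-Net output), finds its contour pixels (region pixels with at least one 4-neighbour in the background), and derives three simple shape descriptors: area, a grid-based perimeter (number of pixel edges facing the background), and compactness 4πA/P². The function name `contour_features` and the choice of descriptors are assumptions, not the authors' feature set.

```python
import math


def contour_features(mask):
    """Hypothetical contour-based shape descriptors for one binary region mask.

    mask: list of equal-length rows containing 0 (background) or 1 (region).
    """
    rows, cols = len(mask), len(mask[0])

    def is_background(r, c):
        # Pixels outside the image are treated as background.
        return r < 0 or r >= rows or c < 0 or c >= cols or mask[r][c] == 0

    area = 0
    perimeter = 0  # number of pixel edges shared with the background
    contour = []   # region pixels touching the background (the contour)
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1:
                continue
            area += 1
            exposed = sum(
                is_background(nr, nc)
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            )
            perimeter += exposed
            if exposed:
                contour.append((r, c))

    # Compactness 4*pi*A/P^2 equals pi/4 for any axis-aligned square on this
    # grid; elongated shapes score lower.
    compactness = 4 * math.pi * area / perimeter ** 2 if perimeter else 0.0
    return {
        "area": area,
        "perimeter": perimeter,
        "contour_pixels": len(contour),
        "compactness": compactness,
    }


# A 3x3 square region inside a 5x5 mask (illustrative input).
square = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(contour_features(square))
```

A feature vector of this kind, computed for every candidate region, is the sort of input the three tree-based classifiers would be trained on.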

We then evaluate a voting-based ensemble of these three classifiers for detecting the injury type in the images labelled unhealthy in the previous stage. Fig. 8 shows the results of each classifier individually together with the performance of the ensemble.
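A basic (hard) majority vote over the three base classifiers can be sketched as follows. Each classifier contributes one predicted label per sample, and the ensemble outputs the most frequent label; with three voters and two classes a tie cannot occur. The per-sample predictions below are hypothetical, and the helper `hard_vote` is illustrative. A library equivalent is scikit-learn's `VotingClassifier` with `voting="hard"`, though the text does not state which implementation the authors used.

```python
from collections import Counter


def hard_vote(*predictions):
    """Return the majority label per sample across several classifiers.

    predictions: one list of labels per classifier, all of equal length
    (0 = Meniscus, 1 = Synovial capsule, as in Fig. 8).
    """
    lengths = {len(p) for p in predictions}
    if len(lengths) != 1:
        raise ValueError("need at least one classifier, all with equal-length predictions")
    ensemble = []
    for votes in zip(*predictions):
        # most_common(1) returns [(label, count)] for the most frequent label.
        label, _count = Counter(votes).most_common(1)[0]
        ensemble.append(label)
    return ensemble


# Hypothetical predictions for five samples (not data from this study).
dt = [0, 1, 1, 0, 1]
rf = [0, 0, 1, 0, 1]
et = [1, 1, 1, 0, 0]
print(hard_vote(dt, rf, et))  # → [0, 1, 1, 0, 1]
```

Because the three classifiers make different mistakes, the vote corrects an error by any single classifier whenever the other two agree on the correct label.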

As shown in the first row of Fig. 8, the decision tree correctly classified 72 class 0 (Meniscus) samples and misclassified 3 of them as class 1 (Synovial capsule). It correctly classified 44 class 1 samples and misclassified 1 as class 0. Its accuracy in distinguishing the Meniscus and Synovial capsule classes is therefore 96.67% (116 of 120 samples).

Fig. 8.

Injury-type detection results of the three classifiers taken separately and of the voting ensemble

As shown in the second row of Fig. 8, the random forest correctly classified 74 class 0 (Meniscus) samples and misclassified one as class 1 (Synovial capsule). It correctly classified 42 class 1 samples and misclassified three as class 0. Its accuracy, 96.67%, is the same as that of the decision tree, but its errors are distributed differently: it makes fewer mistakes on the Meniscus class and more on the Synovial capsule class. That two different classifiers separate the two classes this well suggests that the features extracted by the proposed method are suitable for the task.

As shown in the third row of Fig. 8, the Extra Trees classifier correctly classified 73 class 0 samples and misclassified 2 as class 1. It correctly classified 40 class 1 samples and misclassified 5 as class 0. Its accuracy in distinguishing the Meniscus and Synovial capsule classes is 94.17% (113 of 120 samples).

The fourth row of Fig. 8 shows the result of combining the three classifiers by voting. The voting ensemble correctly classified 74 class 0 (Meniscus) samples and misclassified one as class 1 (Synovial capsule); it correctly classified 44 class 1 samples and misclassified one as class 0. Its accuracy in classifying Meniscus and Synovial capsule cases is 98.33% (118 of 120 samples), the best result among the four approaches.
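The accuracy of each classifier follows directly from the confusion-matrix counts described for Fig. 8. The short check below recomputes it; each matrix has rows for the true class and columns for the predicted class, with class 0 = Meniscus and class 1 = Synovial capsule. The dictionary name `CONFUSION` is illustrative.

```python
# Confusion matrices from Fig. 8: rows = true class, columns = predicted class
# (class 0 = Meniscus, class 1 = Synovial capsule).
CONFUSION = {
    "Decision Tree": [[72, 3], [1, 44]],
    "Random Forest": [[74, 1], [3, 42]],
    "Extra Trees": [[73, 2], [5, 40]],
    "Voting": [[74, 1], [1, 44]],
}


def accuracy(cm):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


for name, cm in CONFUSION.items():
    print(f"{name}: {100 * accuracy(cm):.2f}%")
# Decision Tree: 96.67%
# Random Forest: 96.67%
# Extra Trees: 94.17%
# Voting: 98.33%
```

Every matrix has the same row totals (75 Meniscus and 45 Synovial capsule samples), which confirms that the four classifiers were evaluated on the same 120 images.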

Finally, the overall accuracy of the proposed system must be assessed over all 156 images in the test set. Four images were misclassified in the first stage and two in the second stage, giving an overall accuracy of 96.15% for the proposed method.
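As a worked check, six of the 156 test images are misclassified across the two stages:

$$
\text{Overall accuracy} = \frac{156 - 4 - 2}{156} = \frac{150}{156} \approx 96.15\%
$$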

Next, the proposed technique is compared with deep learning methods. Several convolutional neural network (CNN) architectures were implemented and evaluated on the same dataset, and the results are shown in Table 3. The proposed method outperforms all of them. Because the data are imbalanced, accuracy alone can be misleading, so Table 3 also reports recall and precision; these indicate that the proposed method detects the different classes effectively despite the imbalanced class distribution.

Table 3.

Comparison of the proposed method with CNN-based methods

| Method | Accuracy (%) | Recall (%) | Precision (%) |
|---|---|---|---|
| Proposed method | 97.43 | 94.25 | 96.15 |
| EfficientNetB3 | 87.43 | 84.68 | 86.32 |
| ResNet34 | 72.12 | 67.34 | 69.32 |
| VGG16 | 68.47 | 63.89 | 66.98 |
| ResNet50 | 88.52 | 86.43 | 87.18 |
| EfficientNetB7 | 83.92 | 77.26 | 79.49 |
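Because the classes are imbalanced, recall and precision are reported alongside accuracy. The sketch below computes per-class and macro-averaged recall and precision from a confusion matrix; macro averaging weights each class equally, so the minority class is not swamped by the majority class. The averaging scheme used for Table 3 is not stated, so macro averaging here is an assumption. The example uses the random forest confusion matrix from Fig. 8, not the matrices behind Table 3, which are not reported.

```python
def per_class_recall_precision(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    recall, precision = [], []
    for k in range(n):
        tp = cm[k][k]
        actual = sum(cm[k])                          # samples truly in class k
        predicted = sum(cm[i][k] for i in range(n))  # samples predicted as class k
        recall.append(tp / actual if actual else 0.0)
        precision.append(tp / predicted if predicted else 0.0)
    return recall, precision


def macro(values):
    """Unweighted mean over classes."""
    return sum(values) / len(values)


# Random forest confusion matrix from Fig. 8 (0 = Meniscus, 1 = Synovial capsule).
rf = [[74, 1], [3, 42]]
recall, precision = per_class_recall_precision(rf)
print("recall:", [round(r, 4) for r in recall], "macro:", round(macro(recall), 4))
print("precision:", [round(p, 4) for p in precision], "macro:", round(macro(precision), 4))
```

Here the minority class (Synovial capsule) has the lower recall, which an accuracy figure alone would hide.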

Conclusion

The development of a new deep-learning method for the early diagnosis and treatment of meniscus injury in athletes' knee joints represents a significant advance in sports medicine and physical fitness assessment. Machine learning and machine vision techniques have recently shown strong efficacy in this domain. In the present study, a multi-stage approach was proposed that comprises noise reduction, classification of images as healthy or unhealthy, contour-based feature extraction, and ensemble classification that combines three base classifiers by voting. The results show that the proposed approach performs favorably compared with several established techniques reported in the literature in recent years. In future work, transformer models could be used to further improve the method; they may be particularly beneficial for sequence-like data such as MRI scans, which consist of series of slices.

Research Highlights

What is the current knowledge?

What is new here?

Competing Interests

The authors declare no competing interests.

Ethics Statement

Not applicable.

References

- Mangone M, Diko A, Giuliani L, Agostini F, Paoloni M, Bernetti A. A machine learning approach for knee injury detection from magnetic resonance imaging. Int J Environ Res Public Health 2023; 20:6059. doi: 10.3390/ijerph20126059

- Xue M, Liu Y, Cai X. Automated detection model based on deep learning for knee joint motion injury due to martial arts. Comput Math Methods Med 2022; 2022:3647152. doi: 10.1155/2022/3647152

- Hung TN, Vy VP, Tri NM, Hoang LN, Tuan LV, Ho QT. Automatic detection of meniscus tears using backbone convolutional neural networks on knee MRI. J Magn Reson Imaging 2023; 57:740-9. doi: 10.1002/jmri.28284

- Fritz B, Fritz J. Artificial intelligence for MRI diagnosis of joints: a scoping review of the current state-of-the-art of deep learning-based approaches. Skeletal Radiol 2022; 51:315-29. doi: 10.1007/s00256-021-03830-8

- Awan MJ, Mohd Rahim MS, Salim N, Mohammed MA, Garcia-Zapirain B, Abdulkareem KH. Efficient detection of knee anterior cruciate ligament from magnetic resonance imaging using deep learning approach. Diagnostics (Basel) 2021; 11:105. doi: 10.3390/diagnostics11010105

- Abdullah SS, Rajasekaran MP. Automatic detection and classification of knee osteoarthritis using deep learning approach. Radiol Med 2022; 127:398-406. doi: 10.1007/s11547-022-01476-7

- Kulseng CP, Nainamalai V, Grøvik E, Geitung JT, Årøen A, Gjesdal KI. Automatic segmentation of human knee anatomy by a convolutional neural network applying a 3D MRI protocol. BMC Musculoskelet Disord 2023; 24:41. doi: 10.1186/s12891-023-06153-y

- Bien N, Rajpurkar P, Ball RL, Irvin J, Park A, Jones E. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet. PLoS Med 2018; 15:e1002699. doi: 10.1371/journal.pmed.1002699

- Avola D, Cinque L, Diko A, Fagioli A, Foresti GL, Mecca A. MS-Faster R-CNN: multi-stream backbone for improved Faster R-CNN object detection and aerial tracking from UAV images. Remote Sens 2021; 13:1670. doi: 10.3390/rs13091670

- Astuto B, Flament I, Namiri NK, Shah R, Bharadwaj U, Link TM. Automatic deep learning-assisted detection and grading of abnormalities in knee MRI studies. Radiol Artif Intell 2021; 3:e200165. doi: 10.1148/ryai.2021200165

- Almajalid R, Zhang M, Shan J. Fully automatic knee bone detection and segmentation on three-dimensional MRI. Diagnostics (Basel) 2022; 12:123. doi: 10.3390/diagnostics12010123

- Johnson PM, Lin DJ, Zbontar J, Zitnick CL, Sriram A, Muckley M. Deep learning reconstruction enables prospectively accelerated clinical knee MRI. Radiology 2023; 307:e220425. doi: 10.1148/radiol.220425

- Schiratti JB, Dubois R, Herent P, Cahané D, Dachary J, Clozel T. A deep learning method for predicting knee osteoarthritis radiographic progression from MRI. Arthritis Res Ther 2021; 23:262. doi: 10.1186/s13075-021-02634-4

- Xiongfeng T, Yingzhi L, Xianyue S, Meng H, Bo C, Deming G. Automated detection of knee cystic lesions on magnetic resonance imaging using deep learning. Front Med (Lausanne) 2022; 9:928642. doi: 10.3389/fmed.2022.928642

- Rizk B, Brat H, Zille P, Guillin R, Pouchy C, Adam C. Meniscal lesion detection and characterization in adult knee MRI: a deep learning model approach with external validation. Phys Med 2021; 83:64-71. doi: 10.1016/j.ejmp.2021.02.010

- Iqbal I, Shahzad G, Rafiq N, Mustafa G, Ma J. Deep learning-based automated detection of human knee joint's synovial fluid from magnetic resonance images with transfer learning. IET Image Process 2020; 14:1990-8. doi: 10.1049/iet-ipr.2019.1646

- Wang Q, Yao M, Song X, Liu Y, Xing X, Chen Y. Automated segmentation and classification of knee synovitis based on MRI using deep learning. Acad Radiol 2024; 31:1518-27. doi: 10.1016/j.acra.2023.10.036

- Jonmohamadi Y, Takeda Y, Liu F, Sasazawa F, Maicas G, Crawford R. Automatic segmentation of multiple structures in knee arthroscopy using deep learning. IEEE Access 2020; 8:51853-61. doi: 10.1109/access.2020.2980025

- Kessler DA, MacKay JW, Crowe VA, Henson FM, Graves MJ, Gilbert FJ. The optimisation of deep neural networks for segmenting multiple knee joint tissues from MRIs. Comput Med Imaging Graph 2020; 86:101793. doi: 10.1016/j.compmedimag.2020.101793

- Kemnitz J, Baumgartner CF, Eckstein F, Chaudhari A, Ruhdorfer A, Wirth W. Clinical evaluation of fully automated thigh muscle and adipose tissue segmentation using a U-Net deep learning architecture in context of osteoarthritic knee pain. MAGMA 2020; 33:483-93. doi: 10.1007/s10334-019-00816-5

- Panfilov E, Tiulpin A, Nieminen MT, Saarakkala S, Casula V. Deep learning-based segmentation of knee MRI for fully automatic subregional morphological assessment of cartilage tissues: data from the osteoarthritis initiative. J Orthop Res 2022; 40:1113-24. doi: 10.1002/jor.25150

- Rajamohan HR, Wang T, Leung K, Chang G, Cho K, Kijowski R. Prediction of total knee replacement using deep learning analysis of knee MRI. Sci Rep 2023; 13:6922. doi: 10.1038/s41598-023-33934-1

- Schmidt AM, Desai AD, Watkins LE, Crowder HA, Black MS, Mazzoli V. Generalizability of deep learning segmentation algorithms for automated assessment of cartilage morphology and MRI relaxometry. J Magn Reson Imaging 2023; 57:1029-39. doi: 10.1002/jmri.28365

- Fan F, Liu H, Dai X, Liu G, Liu J, Deng X. Automated bone age assessment from knee joint by integrating deep learning and MRI-based radiomics. Int J Legal Med 2024; 138:927-38. doi: 10.1007/s00414-023-03148-1

- Yi PH, Wei J, Kim TK, Sair HI, Hui FK, Hager GD. Automated detection & classification of knee arthroplasty using deep learning. Knee 2020; 27:535-42. doi: 10.1016/j.knee.2019.11.020

- Astuto B, Flament I, Namiri NK, Shah R, Bharadwaj U, Link TM. Automatic deep learning-assisted detection and grading of abnormalities in knee MRI studies. Radiol Artif Intell 2021; 3:e200165. doi: 10.1148/ryai.2021200165

- Panfilov E, Tiulpin A, Nieminen MT, Saarakkala S, Casula V. Deep learning-based segmentation of knee MRI for fully automatic subregional morphological assessment of cartilage tissues: data from the osteoarthritis initiative. J Orthop Res 2022; 40:1113-24. doi: 10.1002/jor.25150

- Martel-Pelletier J, Paiement P, Pelletier JP. Magnetic resonance imaging assessments for knee segmentation and their use in combination with machine/deep learning as predictors of early osteoarthritis diagnosis and prognosis. Ther Adv Musculoskelet Dis 2023; 15:1759720x231165560. doi: 10.1177/1759720x231165560

- Siouras A, Moustakidis S, Giannakidis A, Chalatsis G, Liampas I, Vlychou M. Knee injury detection using deep learning on MRI studies: a systematic review. Diagnostics (Basel) 2022; 12:537. doi: 10.3390/diagnostics12020537

- Alexopoulos A, Hirvasniemi J, Klein S, Donkervoort C, Oei EHG, Tümer N. Early detection of knee osteoarthritis using deep learning on knee MRI. Osteoarthr Imaging 2023; 3:100112. doi: 10.1016/j.ostima.2023.100112

- Auf der Mauer M, Jopp-van Well E, Herrmann J, Groth M, Morlock MM, Maas R. Automated age estimation of young individuals based on 3D knee MRI using deep learning. Int J Legal Med 2021; 135:649-63. doi: 10.1007/s00414-020-02465-z

- Tolpadi AA, Lee JJ, Pedoia V, Majumdar S. Deep learning predicts total knee replacement from magnetic resonance images. Sci Rep 2020; 10:6371. doi: 10.1038/s41598-020-63395-9

- Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, eds. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015. Cham: Springer; 2015. p. 234-41. doi: 10.1007/978-3-319-24574-4_28

- Abbas S, Farhan S, Fahiem MA, Tauseef H. Efficient shape classification using Zernike moments and geometrical features on MPEG-7 dataset. Adv Electr Comput Eng 2019; 19:45-51. doi: 10.4316/aece.2019.01006

- Adapa D, Joseph Raj AN, Alisetti SN, Zhuang Z, K G, Naik G. A supervised blood vessel segmentation technique for digital fundus images using Zernike moment-based features. PLoS One 2020; 15:e0229831. doi: 10.1371/journal.pone.0229831

- Al-Dhabyani W, Gomaa M, Khaled H, Fahmy A. Dataset of breast ultrasound images. Data Brief 2020; 28:104863. doi: 10.1016/j.dib.2019.104863